Todo

- Sign up in the forum

- Research the initial TOML file for unattended configuration of PVE ('Proxmox autoinstaller')

- Test ZFS RAID1 on two SSDs at home with the 'turtle' Proxmox host

Introduction

basis

- Debian stable + Ubuntu Kernel

- Virtualization with KVM

- LXC containers

- ZFS and Ceph supported

features

- snapshots

- KVM virtualization (Windows, Linux, BSD)

- lxc container

- HA clustering

- live migration

- flexible storage options

- gui management

- proxmox datacenter manager

- proxmox backup server

Support Subscriptions

basic

1 CPU per year / 2 CPUs per year

- access to the enterprise repos

- stable software updates

- support via the customer portal

- three support tickets

- response time: 1 business day

standard

1 CPU per year / 2 CPUs per year

- all basic services

additionally

- 7 support tickets (total 10)

- remote support (via SSH)

- offline subscription key activation

premium

1 CPU per year / 2 CPUs per year

- all standard services

additionally

- unlimited tickets

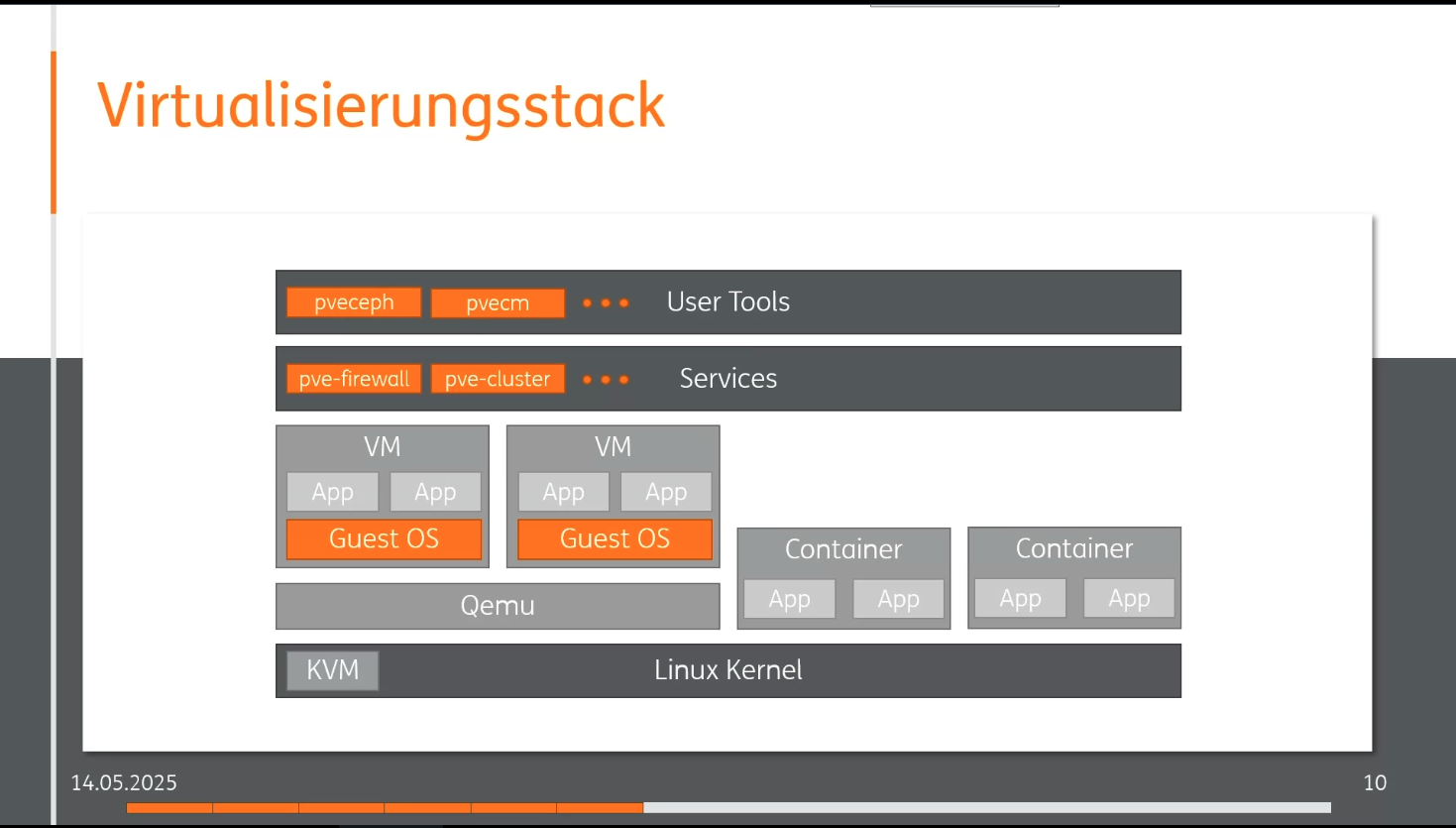

Virtualization stack

Deployment variants

single node

- storage pool

- RAID controller with LVM. Ceph and ZFS do not support RAID controllers; if a RAID controller is present, switch it to HBA (passthrough) mode and use ZFS

Clustering without HA

- several storage pools

- pools not shared - individual

ZFS cluster with asynchronous replication

- at least two pools

- a quorum device in between provides the tie-breaking vote; the config is replicated between the nodes

ceph cluster

- at least 3 nodes

- pool is combined and shared
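
Why the two-node ZFS setup wants a quorum device and why Ceph starts at 3 nodes can be checked with a bit of arithmetic. A minimal sketch, assuming plain majority voting with one vote per node (and one for the quorum device); the function names are made up for these notes.

```python
def votes_needed(total_votes: int) -> int:
    """Smallest strict majority of the given number of votes."""
    if total_votes < 1:
        raise ValueError("total_votes must be at least 1")
    return total_votes // 2 + 1


def tolerated_failures(total_votes: int) -> int:
    """How many votes can be lost while a majority is still reachable."""
    return total_votes - votes_needed(total_votes)


if __name__ == "__main__":
    # Assumption: every node and the quorum device carry exactly one vote.
    setups = [
        ("2 nodes", 2),
        ("2 nodes + quorum device", 3),
        ("3 nodes", 3),
        ("5 nodes", 5),
    ]
    for label, votes in setups:
        print(f"{label}: need {votes_needed(votes)} votes, "
              f"tolerates {tolerated_failures(votes)} failure(s)")
```

With only two votes, losing either one already breaks the majority, so the third vote is what lets one node fail.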

Installation

- it's possible to provide custom TOML files to install Proxmox automatically

- ZFS RAID1 for the boot drive

- define the correct hostname during installation; it is not easy to change later

- first step after installation: adjust the update repos, then update/upgrade the system

- hardware: always better to have as many interface ports as possible

- 2x 1 Gbit for mgmt

- 2x 10 Gbit for VMs (redundant, bond via LACP (needs stacked switches) or active-backup mode)

- 2x 25 Gbit for Ceph storage clustering

- single node: at least 4 ports; clustering: at least 6 ports

- remove the IP address from the virtual bridge and set it directly on the physical interface, so the VMs can't see the Proxmox IP

- bond the ports for VMs (ideally two 10 Gbit ports) and set up the virtual bridge on bond0 so that it can be used by VMs (set 'VLAN aware' on the bridge)

- it's possible to create a bond for the web UI port
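
The network layout from the list above (host IP on the physical mgmt interface, VM ports bonded, VLAN-aware bridge on bond0) could look roughly like what this sketch renders. Proxmox keeps this config in /etc/network/interfaces. Assumptions, not from the course: the interface names eno1/eno3/eno4, the 192.0.2.x addresses, a single unbonded mgmt port, and the helper name `render_interfaces`.

```python
def render_interfaces(mgmt_nic, mgmt_cidr, gateway, vm_nics, bond_mode="802.3ad"):
    """Return /etc/network/interfaces text for one mgmt NIC plus a bonded VM bridge."""
    lines = [
        "auto lo",
        "iface lo inet loopback",
        "",
        # Host IP sits directly on the physical management NIC, not on the bridge.
        f"auto {mgmt_nic}",
        f"iface {mgmt_nic} inet static",
        f"    address {mgmt_cidr}",
        f"    gateway {gateway}",
        "",
    ]
    # Bond members carry no address of their own.
    for nic in vm_nics:
        lines += [f"iface {nic} inet manual", ""]
    lines += [
        "auto bond0",
        "iface bond0 inet manual",
        f"    bond-slaves {' '.join(vm_nics)}",
        "    bond-miimon 100",
        f"    bond-mode {bond_mode}",
    ]
    if bond_mode == "802.3ad":
        # Hash policy only matters for LACP; active-backup uses one link at a time.
        lines.append("    bond-xmit-hash-policy layer2+3")
    lines += [
        "",
        # Bridge for the VMs: no IP address, VLAN aware, on top of the bond.
        "auto vmbr0",
        "iface vmbr0 inet manual",
        "    bridge-ports bond0",
        "    bridge-stp off",
        "    bridge-fd 0",
        "    bridge-vlan-aware yes",
        "    bridge-vids 2-4094",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder names and addresses; adapt to the actual host.
    print(render_interfaces("eno1", "192.0.2.10/24", "192.0.2.1", ["eno3", "eno4"]))
```

Pass `bond_mode="active-backup"` when the switches are not stacked and LACP is not an option.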

VM creation

- ID ranges can be configured in datacenter manager

- set tags at creation

- choose the guest OS type properly, as it presets some features correctly; otherwise performance issues can arise

- installing the QEMU guest agent is recommended; it improves snapshots and other things

- always set 'discard' and 'SSD emulation' in the disk settings. Otherwise Ceph or other clustering solutions are not properly informed about changes on the disks

- best practice is to use VirtIO for storage and for network, as it is the fastest
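
The same settings can also be passed on the command line with `qm create`. A rough sketch that only builds the argument list, assuming a Linux guest, a VirtIO SCSI controller for the disk (so that both discard and SSD emulation can be set), and a bridge called vmbr0. The VM ID, name, storage name and tags are placeholders, and `qm_create_args` is a made-up helper.

```python
import shlex


def qm_create_args(vmid, name, storage, disk_gib, bridge="vmbr0", ostype="l26", tags=()):
    """Build a `qm create` argument list following the checklist above."""
    args = [
        "qm", "create", str(vmid),
        "--name", name,
        "--ostype", ostype,                  # guest OS type, l26 = Linux 2.6+ kernel
        "--agent", "enabled=1",              # expect the QEMU guest agent in the VM
        "--scsihw", "virtio-scsi-single",    # VirtIO SCSI controller
        "--scsi0", f"{storage}:{disk_gib},discard=on,ssd=1",
        "--net0", f"virtio,bridge={bridge}", # VirtIO network card
    ]
    if tags:
        args += ["--tags", ";".join(tags)]
    return args


if __name__ == "__main__":
    # Placeholder values; run the printed command on the Proxmox host itself.
    args = qm_create_args(100, "test-vm", "local-zfs", 32, tags=("lab", "linux"))
    print(shlex.join(args))
```

The guest agent still has to be installed inside the VM; the flag only tells Proxmox to talk to it.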